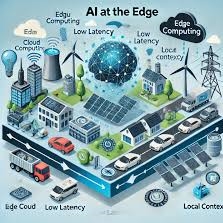

Every millisecond counts when a self-driving car speeds down a road or a drone flies through a busy city. Edge AI, which runs models directly on these devices instead of sending data back and forth to distant cloud servers, cuts latency sharply and makes split-second decisions possible.

The key is *processing data locally*. Traditionally, sensor inputs from LiDAR, radar, cameras, and GPS travelled to distant cloud servers for analysis before instructions were sent back. That round trip can take tens or even hundreds of milliseconds, a significant delay when a car has to brake to avoid hitting a person or a drone has to swerve around an obstacle. Edge AI lets the vehicle or drone run those computations itself, so it can perceive and react without waiting on the network, which *greatly* cuts down on lag.
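To make the cost of a round trip concrete, the short sketch below converts latency into distance travelled. The 100 ms cloud figure sits inside the "tens or even hundreds of milliseconds" range mentioned above; the 10 ms on-device figure and the 100 km/h speed are illustrative assumptions, not measurements.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled at a constant speed while waiting latency_ms."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


# Illustrative latencies only: the on-device value is an assumption.
scenarios = [("cloud round trip", 100.0), ("on-device", 10.0)]

for label, latency_ms in scenarios:
    metres = distance_during_latency(100.0, latency_ms)
    print(f"{label}: {metres:.2f} m travelled at 100 km/h before reacting")
```

Under these assumptions, the vehicle covers almost three metres before a cloud response can arrive, against well under half a metre when the decision is made on board.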

This configuration is *very effective* because processing happens on the device itself or on nearby edge servers, which avoids network outages, congestion, and latency spikes. Such low latency is *especially helpful* for autonomous navigation, where even a split-second pause might spell disaster. With AI-driven perception and decision-making on board, robots can react at speeds that approach those of a human, and they become *more reliable* and far safer.

Processing locally also eases bandwidth problems. Streaming raw sensor data to the cloud continuously is impractical and expensive. Instead, edge devices analyze data as it arrives and send only alerts or short summaries of useful information. This *greatly eases the load on the network*, freeing up bandwidth for important tasks and lowering costs.
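A minimal sketch of this "analyze locally, send only a summary" pattern might look like the following. The `Detection` type, the `summarize_frame` helper, and the thresholds are hypothetical names and values chosen for illustration; a real perception stack would supply its own detection format.

```python
import json
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """Hypothetical output of an on-device object detector."""
    label: str
    confidence: float
    distance_m: float


def summarize_frame(frame_id: int, detections: List[Detection],
                    alert_distance_m: float = 10.0,
                    min_confidence: float = 0.5) -> Optional[dict]:
    """Return a compact alert, or None when nothing is worth uploading."""
    hazards = [d for d in detections
               if d.confidence >= min_confidence
               and d.distance_m <= alert_distance_m]
    if not hazards:
        return None
    nearest = min(hazards, key=lambda d: d.distance_m)
    return {"frame": frame_id, "hazards": len(hazards),
            "nearest": nearest.label, "nearest_m": round(nearest.distance_m, 1)}


detections = [Detection("pedestrian", 0.92, 7.4),
              Detection("car", 0.88, 35.0),
              Detection("sign", 0.30, 5.0)]
alert = summarize_frame(42, detections)
payload = json.dumps(alert).encode("utf-8")

# One uncompressed 1080p RGB frame, used only as a size comparison.
raw_frame_bytes = 1920 * 1080 * 3
print(alert)
print(f"{len(payload)} bytes uploaded instead of {raw_frame_bytes} raw bytes")
```

Frames with no nearby, confident detection produce no upload at all, which is where most of the bandwidth saving would come from in this sketch.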

Local processing also helps with security and privacy. Self-driving cars collect huge amounts of private data. Keeping that data on the device *naturally* reduces exposure, lowering the likelihood of interception and helping businesses meet strict data protection requirements. This “localized containment” builds confidence without slowing the system down.

Edge AI’s success is based on a number of technologies:

– **Optimized lightweight AI models**, deployed through runtimes such as TensorFlow Lite and ONNX Runtime, *run sophisticated algorithms efficiently* on devices with limited resources.

– **Hardware accelerators** like Google’s Coral Edge TPU and NVIDIA Jetson modules *can cut inference times substantially*, making real-time AI possible at the edge.

– **Strong edge architectures** that handle changing workloads let solutions scale as autonomous fleets grow.
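Whichever runtime and accelerator are chosen, the figure that matters is measured on-device inference latency. The sketch below times an arbitrary inference callable and reports an approximate median and 95th-percentile latency. Here `fake_infer` is a hypothetical stand-in for a real model call (for example, one made through TensorFlow Lite or ONNX Runtime), and the 50 ms budget is an assumed value, not a recommendation.

```python
import time


def measure_latency_ms(infer, sample, warmup=5, runs=50):
    """Time repeated calls to infer(sample); return latency stats in milliseconds."""
    # Warm-up calls let caches and lazy initialization settle before timing.
    for _ in range(warmup):
        infer(sample)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)

    timings.sort()
    p95_index = min(len(timings) - 1, int(0.95 * len(timings)))
    return {
        "median_ms": timings[len(timings) // 2],  # approximate median
        "p95_ms": timings[p95_index],
        "max_ms": timings[-1],
    }


def fake_infer(sample):
    """Hypothetical stand-in for a model; replace with a real inference call."""
    return sum(x * x for x in sample)


stats = measure_latency_ms(fake_infer, list(range(1000)))
budget_ms = 50.0  # assumed latency budget, for illustration only
print(stats)
print("within budget:", stats["p95_ms"] <= budget_ms)
```

Reporting a tail percentile alongside the median is a deliberate choice here: for safety-critical control, occasional slow inferences matter more than the typical case.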

Leaders in the field are already demonstrating Edge AI’s potential. Self-driving cars often combine onboard edge computing with 5G connectivity to reduce latency further and make the vehicles more responsive. Delivery and surveillance drones can adjust their flight paths in real time without a round trip to the cloud. These improvements not only make operations safer but also open up applications that latency previously ruled out.

We are entering an “edge-first” era, where autonomous robots will be able to make decisions quickly, reliably, and intelligently. This change will save lives, cut costs, and make more things feasible in automation and mobility.

---

**Why Edge AI’s Latency Cut Is Important for Drones and Self-Driving Cars:**

1. **Split-second responsiveness:** Lets vehicles detect and respond to hazards right away, which is critical for safety.

2. **Network resilience:** Keeps working even when the connection is poor or intermittent.

3. **Saving bandwidth:** Sending less data costs less and makes the network less busy.

4. **Data protection:** Keeps sensitive information on the device, which improves privacy and compliance.

5. **Scalable operation:** Supports growing fleets without creating a bottleneck in the cloud.

6. **Advanced autonomy:** Makes complex judgments closer to sensors, which increases the level of automation.

Putting intelligence close to the sensors turns self-driving cars and drones into *highly versatile*, situationally aware agents that can navigate difficult terrain with quick reflexes, almost like a swarm of bees. This approach is paving the way for a “safer, smarter autonomous future” that will change the way machines move through our environment.